Biases about bias

- henrylfraser

- Oct 11, 2021


Updated: Oct 27, 2021

Picture a machine learning system that relies on crowdsourced data labelers to help rank music recommendations. Is the system vulnerable to bias because labelers may differ in how they label? The answer depends on many things, one of which is who you ask. Bias means different things to different people. The other day I watched a very interesting discussion along these lines between a colleague from a law background (Jake Goldenfein) and a colleague with an ML background (Danula Hettiachchi). My colleagues seemed to have fundamentally different ideas about bias. In law, the humanities and the social sciences, the term 'bias' generally has pejorative associations and involves value judgments: some kinds of 'bias' may be improper or even unlawful. In STEM disciplines, the term tends to have specific, value-neutral definitions. If there are to be productive conversations about bias across disciplines, we will need to reach some accommodation about terms!

There are at least 4 ways to understand the term 'bias' in the context of machine learning systems. Bias also has other meanings in probability theory and in statistics (neither of which is pejorative). But let's focus on the 4 definitions that are most relevant to machine learning and automated decision-making with real-world consequences:

1. Bias in the data-science sense of a systematic measurement or sampling error, where bias describes how far off, on average, the model's predictions or classifications are from the truth.
2. Cognitive bias, in the Tversky / Kahneman sense of human beings using faulty heuristics or following predictable patterns of mistaken judgement (availability bias, confirmation bias, wishful thinking, etc.).
3. Bias in the sense of prejudice or a failure of impartiality on the part of a decision-maker.
4. A biased outcome or trend in decision-making that unfairly favours one group over another (including the narrower case where the outcome unfairly disadvantages a group on the basis of protected characteristics such as race or gender).

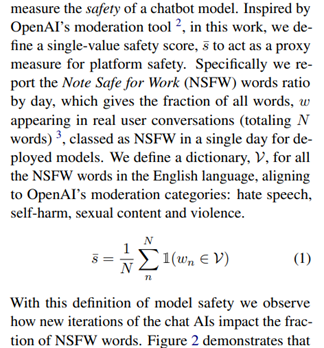

Any or all of 1-3 may cause 4. We might use the word ‘bias’ loosely, in sense 4, to describe the unfair consequences or implications of a prediction error (the expected squared prediction error being the sum of the model's squared bias, in sense 1, its variance, and irreducible noise).

Source: https://datacadamia.com/
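To make sense 1 concrete, here is a minimal simulation sketch. All the parameters are made up for illustration: a true value of 5, Gaussian noise, and a deliberately 'shrunk' estimator (the hypothetical `shrink` factor) that pulls every estimate towards zero. It shows that the mean squared error of the estimates against the true value splits exactly into squared bias plus variance. When the target is a fresh noisy observation rather than the true value itself, an irreducible noise term is added on top.

```python
import random
import statistics

def simulate_estimates(true_mean, noise_sd, n_samples, shrink, n_trials, seed=0):
    """Repeatedly estimate true_mean from noisy samples with a shrunk sample mean.

    shrink < 1 is an illustrative assumption: it introduces systematic error
    (bias in the data-science sense) while also reducing variance.
    """
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_trials):
        sample = [rng.gauss(true_mean, noise_sd) for _ in range(n_samples)]
        estimates.append(shrink * statistics.fmean(sample))
    return estimates

def decompose(estimates, true_value):
    """Return (bias, variance, mean squared error) of the estimates."""
    bias = statistics.fmean(estimates) - true_value     # average offset from truth
    variance = statistics.pvariance(estimates)          # spread around their own mean
    mse = statistics.fmean((e - true_value) ** 2 for e in estimates)
    return bias, variance, mse

estimates = simulate_estimates(true_mean=5.0, noise_sd=2.0, n_samples=10,
                               shrink=0.8, n_trials=5000)
bias, variance, mse = decompose(estimates, true_value=5.0)
print(f"bias={bias:.3f} variance={variance:.3f} "
      f"bias^2+variance={bias ** 2 + variance:.3f} mse={mse:.3f}")
```

With these settings the bias comes out close to -1 (0.8 × 5 − 5) and the variance close to 0.256, and the last two printed numbers agree.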

Cognitive bias (2) may cause prejudice (3). For example, affinity bias (a tendency to be more favourably disposed to people who are like us, rather than different from us) may be a cause of prejudice.

By the same token, the first kind of bias (measurement or sampling or prediction error) may be caused by any or all of 2-4. The sampling and labelling of a data set is influenced by the subjectivity of the data wrangler / labeler (her cognitive biases, habits, or prejudices) and by patterns of unfairness in human affairs. That seems to be the point of this blog post, which describes a range of different causes for 1 (but, like so many other pieces, is confusing about whether bias means unfairness, sampling error, or both).

Another point of confusion is that bias in the data-science sense (1) is assessed after the fact, according to how far off, on average, the model output is from the ‘truth’. This is where it gets difficult. In some cases, say a model that predicts how many goals football teams will kick, truth is not hard to get at. Once the teams have played a game, a season or a tournament, you can confidently draw one curve representing truth (how many goals the teams did in fact score) and one representing the model’s predictions, and describe the average difference as ‘bias’.
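In the football case the calculation is simple once the results are in. The sketch below uses invented predictions and scores for five hypothetical matches. It computes bias as the average signed difference between predicted and actual goals, alongside the mean absolute error, to show that a small average offset can hide larger individual misses that cancel out.

```python
from statistics import fmean

def mean_bias(predicted, actual):
    """Average signed error: positive means the model over-predicts on average."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return fmean(p - a for p, a in zip(predicted, actual))

def mean_absolute_error(predicted, actual):
    """Average size of the misses, ignoring their direction."""
    return fmean(abs(p - a) for p, a in zip(predicted, actual))

# Hypothetical predicted vs. actual goals for five matches (illustrative numbers only).
predicted_goals = [2.1, 1.4, 3.0, 0.8, 2.5]
actual_goals = [2, 1, 2, 1, 3]

print(f"bias = {mean_bias(predicted_goals, actual_goals):+.2f}")
print(f"mean absolute error = {mean_absolute_error(predicted_goals, actual_goals):.2f}")
```

Here the model over-predicts by 0.16 goals on average, while its typical miss is 0.44 goals.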

In other cases, trying to work out bias or variance in relation to ‘truth’ involves a value judgment. Or rather, working out what should stand in as the proxy for truth involves a value judgment that is subject to bias in the sense of 2 or 3. This would seem to be the case, say, for music recommender systems. Truth is some approximation of the preferences of the user, but it’s pretty hard to quantify exactly the value that a user derives from a song; and there are different optima one might aim for. Maximum engagement? A user feeling challenged? A user being emotionally moved? A perfect balance between familiarity and novelty?
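A small sketch shows how the choice of proxy drives the answer. The model scores and both proxies ("listen-through rate" and "star rating / 5") are hypothetical. The same predictions are scored against each proxy in turn, and the sign of the measured bias flips depending on which stand-in for truth is chosen.

```python
from statistics import fmean

def mean_bias(predicted, reference):
    """Average signed gap between model scores and whatever stands in for truth."""
    return fmean(p - r for p, r in zip(predicted, reference))

# Hypothetical model scores (0-1) for four recommended songs.
predicted = [0.9, 0.7, 0.6, 0.8]

# Two hypothetical proxies for "truth"; picking one is itself a value judgment.
proxies = {
    "listen-through rate": [0.95, 0.80, 0.70, 0.85],
    "star rating / 5": [0.60, 0.80, 0.40, 0.60],
}

for name, reference in proxies.items():
    print(f"{name}: bias = {mean_bias(predicted, reference):+.3f}")
```

Against listen-through rate the model looks like it under-predicts (about -0.075). Against star ratings it looks like it over-predicts (about +0.15).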

On top of that, if you are relying on crowdsourced labelling to train a supervised ML system, understandings of whatever is standing in for truth may differ (as a result of bias in the 2nd or 3rd sense, or simply as a result of differences in labeler perspectives). The result is that you get bias in the data-science sense (1) of sampling error, or at least inconsistency in labelling, and that inconsistency may in some circumstances lead to unfair outcomes (4). This seemed to be where my ML colleague was focused.

My law colleague, on the other hand, was uncomfortable with the assumption that there was a ground truth against which to assess the biases of labelers; and with the use of a loaded term like 'bias' to describe deviations from a chosen proxy.
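The two framings can be seen side by side in a minimal sketch with invented labels from three hypothetical crowd workers. One common way to flag a 'biased' labeler is to measure each worker's average signed gap from the consensus of the others. The code makes the law colleague's point visible: the consensus is simply a chosen proxy, so the labeler who deviates from it is 'biased' only relative to that choice.

```python
from statistics import fmean

# Hypothetical relevance labels (1-5) from three crowd workers for the same five songs.
labels = {
    "labeler_a": [5, 4, 3, 4, 2],
    "labeler_b": [4, 4, 3, 3, 2],
    "labeler_c": [2, 3, 1, 2, 1],
}

def deviation_from_consensus(labels):
    """Average signed gap between each labeler and the mean of the *other* labelers.

    The consensus is an assumed proxy for truth, not truth itself.
    """
    result = {}
    for name, own in labels.items():
        others = [v for k, v in labels.items() if k != name]
        consensus = [fmean(item_labels) for item_labels in zip(*others)]
        result[name] = fmean(o - c for o, c in zip(own, consensus))
    return result

for name, gap in deviation_from_consensus(labels).items():
    print(f"{name}: {gap:+.2f} relative to the others")
```

Labeler C rates about 1.6 points below the others on average. Whether that is 'bias' or a legitimately different taste in music is exactly the question the numbers cannot settle.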

In other cases still, you get complicated mixes of different kinds of bias with implications that aren’t always what they seem. Take a model that classifies photos of skin lesions as cancer (or not). After the model has been applied to an image, doctors can take a biopsy of the lesion and confirm or disconfirm the classification. But the biopsy test will presumably have some false positives and false negatives, meaning that the measurement of the model bias against 'truth' isn’t straightforward.
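How much an imperfect reference test distorts the picture can be sketched with a little probability. Every figure below is an illustrative assumption: 10% prevalence, a model with 90% sensitivity and 85% specificity, and a biopsy with 95% sensitivity and 98% specificity. The sketch also assumes that model errors and biopsy errors are independent given the patient's true status. It computes the sensitivity and specificity the model would *appear* to have when scored against biopsy results instead of the unknowable true status.

```python
def apparent_accuracy(prevalence, model_sens, model_spec, ref_sens, ref_spec):
    """Model sensitivity/specificity as measured against an imperfect reference test.

    Assumes model and reference-test errors are conditionally independent
    given the true disease status.
    """
    # Probability the reference test (e.g. a biopsy) comes back positive.
    p_ref_pos = prevalence * ref_sens + (1 - prevalence) * (1 - ref_spec)
    # Probability that model and reference both say "positive".
    p_both_pos = (prevalence * model_sens * ref_sens
                  + (1 - prevalence) * (1 - model_spec) * (1 - ref_spec))
    # Probability that model and reference both say "negative".
    p_both_neg = (prevalence * (1 - model_sens) * (1 - ref_sens)
                  + (1 - prevalence) * model_spec * ref_spec)
    return p_both_pos / p_ref_pos, p_both_neg / (1 - p_ref_pos)

sens, spec = apparent_accuracy(prevalence=0.10, model_sens=0.90, model_spec=0.85,
                               ref_sens=0.95, ref_spec=0.98)
print(f"apparent sensitivity={sens:.3f}, apparent specificity={spec:.3f}")
```

Under these assumptions the model's measured sensitivity drops to about 0.78 even though its true sensitivity is 0.90. Part of the apparent 'error' belongs to the biopsy, not the model.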

Things get even more confusing if we know that the degree of classification error by the model is much higher for dark-skinned people than for light-skinned people. What if it is so inaccurate on images of dark skin that it cannot safely be used for dark-skinned patients? On its face, the system would appear to be biased in the sense of 3 (prejudice) and 4 (unfairness). The benefits of accurate classification are not enjoyed equally, and are distributed according to skin colour. The outcome is that dark-skinned people get worse medical treatment, or are more likely to require a biopsy than light-skinned people, for whom it might be possible to reach a diagnosis without that surgical intervention.

But what if it turns out that this apparently unfair outcome cannot really be ascribed to 3 (prejudice)? Perhaps the reason the model doesn’t work for dark skin is that dark-skinned people get skin cancer at a far lower rate than light-skinned people. Perhaps there simply isn't enough data to train the system on. If that were true, there would be bias in the sense of a sampling error due to lack of relevant data (1), leading to a differential rate of prediction / categorisation errors between dark- and light-skinned people. But there would not be bias in the sense of discrimination or prejudice (3), even though in general terms we might think it is unfair (4) that dark-skinned people are disadvantaged with respect to the diagnostic tools available to them. Then again, we might blame prejudice (3) for the fact that more effort hasn't been put into gathering sufficient data for dark-skinned patients.
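The measurable part of this story can be sketched with invented evaluation counts: 1,000 hypothetical light-skin images and only 20 dark-skin images. The code computes per-group error rates with a rough 95% interval using the normal approximation, which is crude for small groups; a Wilson interval is a common, better-behaved alternative. The numbers can show the disparity (4) and hint at thin data (1), but nothing in them says whether prejudice (3) played a part.

```python
from math import sqrt

def group_error_rates(records):
    """records: iterable of (group, correct) pairs.

    Returns {group: (n, error_rate, standard_error)} using the normal
    approximation for the standard error of a proportion.
    """
    counts = {}
    for group, correct in records:
        n, errors = counts.get(group, (0, 0))
        counts[group] = (n + 1, errors + (0 if correct else 1))
    summary = {}
    for group, (n, errors) in counts.items():
        rate = errors / n
        summary[group] = (n, rate, sqrt(rate * (1 - rate) / n))
    return summary

# Hypothetical evaluation results: many light-skin images, very few dark-skin images.
records = ([("light", True)] * 900 + [("light", False)] * 100
           + [("dark", True)] * 14 + [("dark", False)] * 6)

for group, (n, rate, se) in group_error_rates(records).items():
    print(f"{group}: n={n}, error rate={rate:.2f} (±{1.96 * se:.2f})")
```

The light-skin error rate is pinned down to within about two percentage points. The dark-skin estimate is roughly ±0.20, which is itself a symptom of the sampling problem described above.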

The point is... there are many sources of possible disagreement about whether a machine learning system is 'biased'.

Perhaps all of this is elementary in data science or in the literature on fairness of automated decision making. But, speaking for myself, I am often confused by how scholars of automated decision making from different disciplines use the term 'bias' and wish we could converge on clearer definitions across disciplines.
