Regulating AI with dynamic definitions

- henrylfraser

- May 10, 2021


Updated: May 24, 2021

A basic problem in the regulation of technology, and especially of artificial intelligence, is the problem of definition. There are many definitions of AI, and the term 'AI' is used to describe many different technologies that look different and do rather different things. An expert system that automates tax accounting, a self-driving car, and a facial recognition system that unlocks your phone are all 'AI'.

Lawmakers don't have the luxury of being lax in their definitions. They can't just assume that we know AI when we see it because, as AI and law researcher Jonas Schuett puts it,

Every regulation needs to define its scope of application. It determines whether or not a regulation is applicable in a particular case. From the regulatee’s perspective, it answers the question: "Do I need to apply this regulation?"

A regulation that regulates AI needs to define AI and it needs to do it well.

A new European regulation

An overbroad definition increases the risk of regulation that extends to too many technologies; an overly narrow definition may result in a failure to capture and address pressing harms. Schuett recommended a 'risk-based' approach to defining and regulating AI (he's not the only one). I expect he's pleased to see that Europe's new proposed AI regulation (I'll call it the 'Proposed Act') adopts this approach. The Proposed Act only regulates 'prohibited artificial intelligence practices' and 'high-risk AI systems', avoiding regulatory burden for low-risk systems.

But the Proposed Act also does something else quite interesting with its approach to definition: both in defining 'Artificial Intelligence' as a term, and in defining the scope of the regulation based on risk. In both instances, rather than using a long, prosey chunk of technical legal language to generate exhaustive definitions, the Proposed Act uses a technique that a lawyer might call 'incorporation by reference'. As I learned from my colleague Pat Brown in my time at Treescribe, a software developer might describe the same technique as using 'layers of abstraction'.

Here's the definition:

'artificial intelligence system' (AI system) means software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with.

This definition has what lawyers would call two 'limbs'. In plain English, that means that two things have to be true for something to be considered an 'artificial intelligence system' for the purposes of the regulation. Let's deal with the second limb first. Loosely speaking, being able to generate outputs that influence its environment in pursuit of a human-provided objective is a necessary, but not sufficient, condition for a system to be considered an 'AI system' (for the purposes of the regulation, anyway). The list of outputs is illustrative rather than exhaustive, which means that the definition is open-ended: outputs might be of different kinds, and they might be of kinds that we haven't yet contemplated.

The other necessary, but not sufficient, condition (the first limb) is simple: the system must be developed using one of the techniques on a specified list. The list, in Annex I of the Proposed Act, is currently really quite short and essentially includes machine learning, reasoning / logic-based systems, and statistical / Bayesian methods.
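Read as logic, the two limbs are joined by 'and'. Here's a rough Python sketch of that structure, assuming a deliberately simplified model: the `System` fields, the technique labels in `ANNEX_I_TECHNIQUES` and the function `is_ai_system` are hypothetical illustrations rather than terms from the Proposed Act, and the real annex is considerably more detailed.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified stand-in for Annex I; the real annex is more detailed.
ANNEX_I_TECHNIQUES = frozenset({
    "machine learning",
    "logic-based",
    "statistical",
})


@dataclass
class System:
    """A hypothetical description of a piece of software."""
    techniques: set[str] = field(default_factory=set)
    # Does it generate outputs (content, predictions, recommendations,
    # decisions, or kinds not yet contemplated) that influence its
    # environment in pursuit of human-defined objectives?
    generates_influential_outputs: bool = False


def is_ai_system(system: System) -> bool:
    # First limb: developed with at least one technique on the annexed list.
    first_limb = bool(system.techniques & ANNEX_I_TECHNIQUES)
    # Second limb: generates outputs that influence its environment.
    second_limb = system.generates_influential_outputs
    # Both limbs are necessary; neither alone is sufficient.
    return first_limb and second_limb


face_unlock = System({"machine learning"}, generates_influential_outputs=True)
plain_calculator = System({"arithmetic"}, generates_influential_outputs=True)
print(is_ai_system(face_unlock))       # True
print(is_ai_system(plain_calculator))  # False: no listed technique
```

Note that the definition itself never spells out what 'machine learning' means; it only asks whether the technique appears on the list.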

From the point of view of legislative drafting technique, which must solve the technical problem of how to specify the right things to regulate, what is interesting is not what's on the list, but rather the way the list is used. Incorporating a list by reference is an elegant solution to the problem of defining something that is in a state of constant change and development.

Firstly, it makes the definition flexible in the face of change. A recent proposed UK regulation of Internet of Things security takes a similar approach to defining IoT devices (incorporation by reference of a list) and the accompanying policy document even goes so far as to state explicitly that this is done to ensure the definition is 'adaptable'. Why does this technique create an adaptable definition? Because you can update the list. Rather than requiring amendment by a parliamentary Act (an involved process that places demands on limited parliamentary time), the list can be amended by executive fiat. The European Commission may exercise administrative power under Art 4 to amend the list of techniques, so as AI methods change and develop, the definition can keep up.
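Here's a rough Python sketch of why this makes the definition adaptable, assuming a toy model in which the annex is a set that the operative provision looks up by name each time it is applied. The names `annexes`, `uses_listed_technique` and `amend_annex`, and the technique labels, are all hypothetical; `amend_annex` merely stands in for the Commission's Art 4 power and says nothing about the actual procedure.

```python
from collections.abc import Iterable

# Toy model of incorporation by reference: the annex lives apart from the
# operative text and can be amended on its own. Labels are hypothetical.
annexes: dict[str, set[str]] = {
    "Annex I": {"machine learning", "logic-based", "statistical"},
}


def uses_listed_technique(techniques: set[str]) -> bool:
    # The operative provision names the annex rather than copying its
    # contents, so it always reads whatever the annex currently says.
    return bool(techniques & annexes["Annex I"])


def amend_annex(name: str, add: Iterable[str] = (), remove: Iterable[str] = ()) -> None:
    # Hypothetical stand-in for the Commission's power to amend the list.
    annexes[name] = (annexes[name] | set(add)) - set(remove)


new_method = {"neuro-symbolic"}  # hypothetical future technique
print(uses_listed_technique(new_method))  # False
amend_annex("Annex I", add=new_method)
print(uses_listed_technique(new_method))  # True, with no change to uses_listed_technique
```

The function playing the role of the definition is never edited; only the annex changes, which is the whole point of the technique.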

Secondly, the incorporation by reference technique keeps the definition short and relatively simple. It is therefore less likely to generate uncertainty and ambiguity. Using a list removes the pressure to try to capture a whole range of different and detailed practices in unsuitably broad, generalising language in the operative provisions of the regulation. The list (or whatever else is incorporated by reference) can get as detailed as you need it to be, while the description of what makes something an AI system remains simple: is it on the list, and does it generate outputs that influence its environment in pursuit of given objectives?

What about the Proposed Act's approach to scope? It prohibits certain uses of AI in short order. The prohibition takes up a single article (Art 5) of an 85-article document.

Then the rest of the Proposed Act is concerned with setting out strict compliance rules for 'high-risk AI systems'. That term is not defined in the definitions section; instead, the classification is again made through a kind of definition, only this time in the operative section of the document. Again, the approach is to use a definition with two elements, but in this case satisfying either element is sufficient to classify a system as high risk. So if a system meets the criteria in the first element (basically, is it a 'safety component' of a product or system?), it's high risk, and it's regulated. The second element is again: is it on the list? If it is on the list, it's regulated. That list is annexed to the Proposed Act and incorporated by reference. It is rather longer than the list in Annex I, and includes biometric identification systems and employment / recruitment AIs, among many other kinds of system. But once again, the list can be updated reasonably easily by the Commission, without requiring an Act of parliament.
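As a rough sketch, the either/or structure of the high-risk classification can be written in Python. The `AISystem` fields, the `HIGH_RISK_LIST` entries and `is_high_risk` are hypothetical simplifications: the real first element involves more than a yes/no 'safety component' flag, and the real annexed list is much longer.

```python
from dataclasses import dataclass

# Hypothetical, simplified stand-in for the annexed list of high-risk uses.
HIGH_RISK_LIST = frozenset({
    "biometric identification",
    "employment and recruitment",
})


@dataclass
class AISystem:
    """Hypothetical description of an AI system for classification purposes."""
    is_safety_component: bool
    use_case: str


def is_high_risk(system: AISystem) -> bool:
    # First element: is it a safety component of a product or system?
    # Second element: is its use on the annexed list?
    # Unlike the two-limbed AI definition, either element is enough.
    return system.is_safety_component or system.use_case in HIGH_RISK_LIST


print(is_high_risk(AISystem(is_safety_component=False, use_case="employment and recruitment")))  # True
print(is_high_risk(AISystem(is_safety_component=True, use_case="braking control")))  # True
print(is_high_risk(AISystem(is_safety_component=False, use_case="spam filtering")))  # False
```

The only structural difference from the AI definition is the operator: `or` here, `and` there.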

In short, the two-limbed approach to defining AI systems in the Proposed Act strikes a balance between specificity and flexibility by using the legal drafting technique of incorporation by reference alongside direct drafting.

Layers of abstraction

This technique, as I mentioned above, bears a strong family resemblance to the software development practice of using 'layers of abstraction'. My colleague Pat Brown and I have recommended that lawyers should use this concept more often to make contracts more readable. Having reflected more on this meta-concept, I think it is useful in the regulatory context as well.

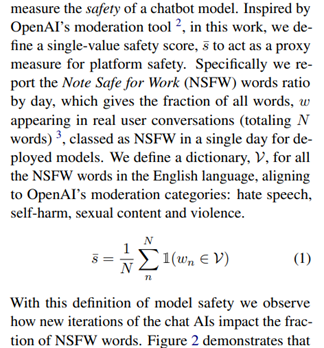

First, though, let's clear up some terminology. A layer of abstraction is essentially software or code that intermediates between a high-level request or command and the low-level operations needed to fulfil that request or command. For example, the graphical user interface on your computer (what you normally see when you switch your computer on) sits at a high level of abstraction. The lowest level of abstraction is binary code. We are all familiar with using CTRL + S to save a file. This command operates at a high level of abstraction, across multiple file types. In context (for a PDF, an image, a text file or whatever), the application translates it into the operations that particular file type requires, and those operations pass down through several further layers until the file is written to storage as binary. It would be a nightmare if we had to specify all the exact operations required to save a file every time. Layers of abstraction, with the CTRL + S command at the top layer, solve this problem.
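The CTRL + S example can be sketched in Python as three layers. This is a toy model under stated assumptions: `save`, `write_bytes` and the `SERIALIZERS` table are hypothetical names, a dictionary stands in for the disk, and real applications and operating systems have many more layers between the command and the hardware.

```python
import json
import os

# Lowest layer in this sketch: raw bytes handed to a storage backend.
# A dictionary stands in for the disk; a real system goes further down,
# through the operating system, file system and device drivers.
storage: dict[str, bytes] = {}


def write_bytes(path: str, data: bytes) -> None:
    storage[path] = data


# Middle layer: each file type knows how to turn its content into bytes.
def serialize_text(content: str) -> bytes:
    return content.encode("utf-8")


def serialize_json(content: dict) -> bytes:
    return json.dumps(content, sort_keys=True).encode("utf-8")


SERIALIZERS = {
    ".txt": serialize_text,
    ".json": serialize_json,
}


# Top layer: the equivalent of CTRL + S. The caller only says "save";
# the layers below work out what that means for this file type.
def save(path: str, content: object) -> None:
    extension = os.path.splitext(path)[1]
    write_bytes(path, SERIALIZERS[extension](content))


save("notes.txt", "Draft regulation")
save("settings.json", {"theme": "dark"})
print(storage["notes.txt"])      # b'Draft regulation'
print(storage["settings.json"])  # b'{"theme": "dark"}'
```

The caller issues the same `save` command for both files; only the layer beneath decides how each one is turned into bytes.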

[Source: Scimos]

Layers of abstraction also permit coders to deal with different components of software in a modular way. We can change and adjust operations at lower levels of abstraction, while keeping the highest level commands the same. If all the operations were included in the top layer of abstraction, this would be impossible.
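Here's a hedged Python sketch of that modularity: the top-level `save` call stays exactly the same while the storage layer beneath it is swapped for one that compresses data first. The class and function names are hypothetical, and in-memory dictionaries stand in for real storage.

```python
import zlib
from typing import Protocol


class Storage(Protocol):
    """Lower layer: anything that can store bytes under a name."""

    def write(self, name: str, data: bytes) -> None: ...


class MemoryStorage:
    """Stores bytes as-is in a dictionary (a stand-in for a disk)."""

    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}

    def write(self, name: str, data: bytes) -> None:
        self.files[name] = data


class CompressedMemoryStorage:
    """A drop-in replacement layer that compresses bytes before storing them."""

    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}

    def write(self, name: str, data: bytes) -> None:
        self.files[name] = zlib.compress(data)


def save(storage: Storage, name: str, text: str) -> None:
    # Top layer: the same command whichever storage layer sits beneath it.
    storage.write(name, text.encode("utf-8"))


plain = MemoryStorage()
compressed = CompressedMemoryStorage()
save(plain, "act.txt", "Article 5")
save(compressed, "act.txt", "Article 5")
print(zlib.decompress(compressed.files["act.txt"]) == plain.files["act.txt"])  # True
```

In the regulatory analogy, the operative definition plays the part of `save`, and the annex plays the part of the layer that can be swapped out underneath it.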

If we are going to regulate complex and evolving systems, like AI, we should be encouraged when we see parallels with software development techniques for managing complexity. After all, legislation is nothing more than an abstracted set of commands for operations we want multiple agents to perform as befits their context. It is promising to see lawmakers making use of the helpful meta-concepts at their disposal in defining AI and the scope of AI regulation.

Let's do more with this

Remember, we aren't limited to lists and annexes when it comes to incorporation by reference. We can incorporate a table, a URL, a Google Doc, even a blockchain ledger or database: all of which change, update and evolve in different ways. We should be on the lookout for other and better ways to apply software development insights to the technical legal tools used to regulate technology.
